Better Search Is Everyone's Job

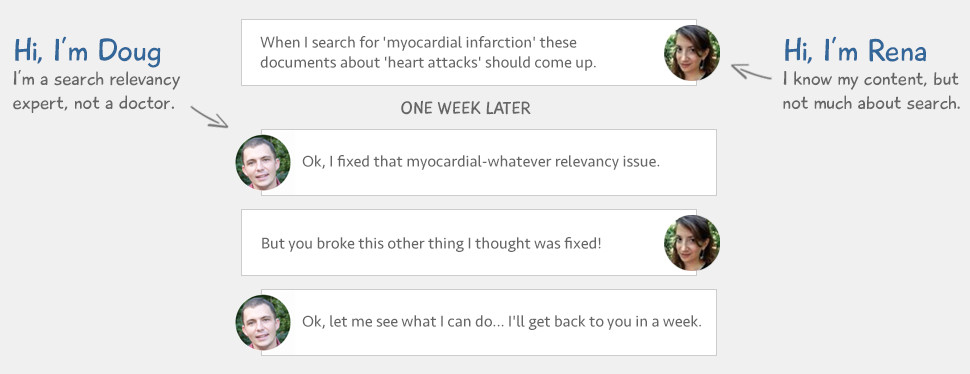

The real secret to search is simple: arm developers with the knowledge of the people most familiar with your users, such as marketing, content curators, and domain experts. Unfortunately, most conversations go like this:

Poor interaction between developers and content experts causes search quality to slide backwards. Content experts have little insight into how search behaves or why. Developers, on the other hand, lack an understanding of what search should do. Despite their search expertise, they rarely know what "relevant" means for your application. Only the user experts carry this knowledge, so every change a developer makes without it is fraught with danger and risks destroying the relevance of your search results.